It all sounds so simple. Just make a list of all the risks. Then you can start figuring out how to prioritise them and manage them in a comprehensive and visible way. Job done.

This was how it seemed back in the early 90s when we took some tools that had proven quite effective for the management of hazardous plant. We started with the HAZOP, which went through a piping and instrumentation diagram in a systematic way, applying a set of prompts and figuring out what would happen as each one applied a particular stress to the system. A team of blokes could do one plant in only a week provided they were suitably disciplined and engaged. We then discovered there was a poor man’s version of HAZOP which was a bit less structured and thought we could apply that to anything. So the high level HAZOP of railways and retail outlets was born. We also pinched the likelihood and impact matrices that were used to categorise the risks a plant hazard posed to its personnel. It just took a bunch of process-minded, nitwit engineers – or maybe management consultants – to have the idea of creating a before and after probability-impact diagram (PID) and, voilà!, we had some measures. Man, now we were really managing risk!

The next step was obviously to assign owners, create action plans, add a few half-formed ideas about escalating and delegating risk, risk appetite, and a few more, write it up in standards, use the notion of subjective probability to do some sexy quantification (well, on second thoughts, perhaps we’ll forget that subjective bit), put it all in a pretty database and call it ERM. Farewell to high level HAZOPs. Enter the big management consulting organisations and we have an industry.

Sounds good, but over the years as I’ve worked with this, I’ve felt increasingly unhappy. It’s not just the pseudo-scientific claptrap side of it that’s exposed by the description I’ve just given. I can deal with that. It’s the fact that every time I sit down to create or edit a risk register I do it with an increasing sense of gloom because I know I’ll spend hours agonising over the best way to do it, in spite of which I shall end up knowing I’ve failed. It’s the risk register that is the real worm at the heart of risk management and I shall not be happy until I’ve found a better way to articulate the risk profile.

First let’s take a slight deviation. My friend Matthew Leitch has a novel take on risk management which he calls “working in uncertainty.” On his website of that name you can discover his approach. Its main characteristic is an emphasis on actually managing risk rather than jumping through the ERM hoops. Matthew rightly identifies that all this activity can actually detract from working towards a better future in the face of uncertainty. His approach starts with the natural controls we all routinely put in place for risk and then invites us to think about what more we could do. He asks us to change our language and to adopt a much more natural approach. A review of working in uncertainty is planned for this site.

Matthew has a special page devoted to a critique of the risk register concept. He makes many valid points and he also provides some vague suggestions as to how to characterise risk for quantification. (In case this sounds critical, I readily admit they’re not as vague as the ones I was forced to put in my book.) But this article is about my experience, and while I’m on a similar hymn sheet to Matthew, it’s not quite the same one. I’m not so opposed to the ‘risk’-type jargon and I’m not so against the idea of listing the controls against the risks. So between us the song sounds pretty awful.

So what’s wrong with risk registers? Here are some symptoms.

- It’s difficult to decide what the risk event should be. Each event has a string of causes and a string of consequences. It’s not obvious which one should sit in the centre of the bow tie and any attempt to do this is bound to be arbitrary. Some people find the bow tie concept very useful, and indeed it helps, but that’s as far as it goes. The more general concept of a linked fault and event tree helps more, at least conceptually, but is only part of the story. The extent to which the trees are inconveniently linked across the system tells you something about the problem.

- Even when you’ve decided, it’s really hard to articulate it accurately so that everyone understands it and it’s comprehensive enough to cover all the things that aren’t covered by other risks. When reviewing a risk register people will gladly say, ‘well if you read it, it doesn’t cover that,’ (so they don’t have to quantify ‘that’), blithely ignoring that ‘that’ has now disappeared into the ether, never to return.

- Risk registers grow indefinitely as you identify more and more features which are significant enough not to be forgotten about. This conflicts directly with managers’ wish to keep it simple, stupid: ‘I can’t manage more than 5/10/30 things at a time.’ As they grow it is increasingly difficult to ensure the risks don’t overlap.

- Risks defy categorisation. Risk standards are full of well-meaning advice on how to do this. Clients love to ask for their risk broken down by geographical area, system, subcontractor, or whatever. You can humour them – even when you know they are about to embark on the ultimate folly of divvying up risk pots accordingly – but it doesn’t generally make much sense.
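The arbitrariness of the bow tie can be made concrete with a toy calculation. The Python sketch below assumes a single linear chain of causes and consequences with invented conditional probabilities (the chain, its figures and the helper `register_line` are all hypothetical). Wherever you choose to put the ‘risk event’, the expected value of the line is the same, but the probability and impact you write into the register change completely.

```python
# A minimal sketch, assuming one linear cause-consequence chain with
# made-up conditional probabilities. All names and numbers are hypothetical.
from dataclasses import dataclass

@dataclass
class Link:
    name: str
    p_given_previous: float  # P(this step happens | previous step happened)

# Hypothetical chain: each step can only happen if the previous one did.
CHAIN = [
    Link("key supplier fails", 0.2),
    Link("equipment delivered late", 0.5),
    Link("commissioning delayed", 0.6),
]
FINAL_COST = 1_000_000  # hypothetical cost if the last step occurs

def register_line(chain, centre):
    """Describe the whole chain as one register line centred on `centre`.

    Returns (probability of the central event, expected cost given it).
    """
    p_event = 1.0
    for link in chain[: centre + 1]:      # causes up to and including the event
        p_event *= link.p_given_previous
    p_downstream = 1.0
    for link in chain[centre + 1 :]:      # consequences after the event
        p_downstream *= link.p_given_previous
    return p_event, FINAL_COST * p_downstream

for i, link in enumerate(CHAIN):
    p, impact = register_line(CHAIN, i)
    print(f"{link.name:28s} P={p:.2f} impact={impact:>10,.0f} EV={p * impact:,.0f}")
```

Note that the ‘impact’ of an upstream line is itself an expected value over the rest of the chain, which is one reason single-point impact figures are so hard to agree on.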

What’s going on here? What you are trying to do is characterise the possible future states of a system. The risk register approach tries to do this by categorising these states in terms of whether or not a specific event (the risk register line item, or more specifically, the risk event) occurs in that state. Put that way, it doesn’t sound a very good idea. If you think more directly about the components of the system you can see how the problems come about. Each component can be categorised several ways (as above) and each component can be broken down indefinitely. The system model becomes a series of hierarchies, each of which can be decomposed to an effectively infinite level of detail. You start thinking fractally. Each element of the model can interact with other elements of the hierarchies in ways which are quite unstructured. I think the principal driver of the risk register problem is that such models do not, in fact, aggregate.
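The state-space view can be sketched directly. In the toy Python model below (every component, distribution and risk event is an assumption made up for illustration) a future state is a dictionary of component values and each register line is just a yes/no test on that state. Nothing forces those tests to partition the states: some states trigger several lines and others trigger none, so adding up line probabilities double-counts.

```python
# A minimal sketch, assuming a toy system with three uncertain components.
# Component names, distributions and risk events are all hypothetical.
import random

def sample_state(rng):
    """Draw one possible future state of the toy system."""
    return {
        "inflation": rng.gauss(0.03, 0.02),      # annual rate
        "supplier_ok": rng.random() > 0.1,       # key supplier survives
        "design_changes": rng.randint(0, 6),     # number of late design changes
    }

# Each register line is a predicate: does this risk event occur in this state?
RISK_EVENTS = {
    "high inflation": lambda s: s["inflation"] > 0.05,
    "supplier failure": lambda s: not s["supplier_ok"],
    # Delay has several causes, one of which is already a line of its own.
    "programme delay": lambda s: s["design_changes"] >= 4 or not s["supplier_ok"],
}

def classify(n=50_000, seed=1):
    """Return P(each event), P(more than one event), P(no event)."""
    rng = random.Random(seed)
    counts = {name: 0 for name in RISK_EVENTS}
    overlaps = none = 0
    for _ in range(n):
        state = sample_state(rng)
        hits = [name for name, occurs in RISK_EVENTS.items() if occurs(state)]
        for name in hits:
            counts[name] += 1
        overlaps += len(hits) > 1   # state falls under several lines
        none += not hits            # state falls under no line at all
    return {k: v / n for k, v in counts.items()}, overlaps / n, none / n

probs, p_overlap, p_none = classify()
print(probs)
print(f"P(two or more events) = {p_overlap:.3f}, P(no event) = {p_none:.3f}")
print(f"sum of line probabilities = {sum(probs.values()):.3f} "
      f"vs P(at least one event) = {1 - p_none:.3f}")
```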

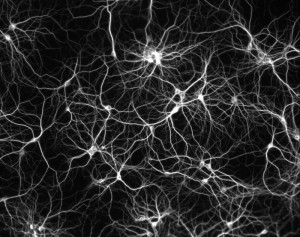

I’ll try to explain what I mean by this. Imagine an influence diagram. Each node reflects a characteristic or component of the system and the influences cause changes to propagate between the nodes. If there is an element of randomness in the node states then this will create risks which may propagate deterministically over the remainder of the network, or, more likely, will propagate in a way which contains additional randomness. It’s a big influence diagram, so to make sense of it you try to group the nodes into supernodes. Each supernode has more complex behaviour reflecting all the nodes and influences within it. And it is linked to the other supernodes by ‘superinfluences’: complex pipelines which aggregate all the influences linking the various nodes in each supernode. This approach and its obvious generalisations seem like the only way to make progress in characterising risk. But it just doesn’t work. There seems to be no general way to make high level sense of the low level detail. The devil really is in the detail. Understanding risk means understanding the minutiae of the system, not just looking at generic risk categories.
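One way to see why supernodes resist summarising: the Python sketch below assumes a supernode containing just two binary nodes, A and B, and says the supernode fails if either node fails. The three wirings are hypothetical, chosen so that every node looks identical from outside (each fails 10% of the time), yet the supernode’s failure probability ranges from 0.10 to 0.20. A superinfluence that only carried node-level summaries would lose exactly the internal detail that decides the answer.

```python
# A minimal sketch, assuming a "supernode" of two binary nodes A and B that
# fails if A or B fails. The three wirings are hypothetical: they share the
# same node-level summaries but give different supernode behaviour.
from itertools import product

P_FAIL = 0.1  # each node fails with probability 0.1 under every wiring

def joint_independent():
    # A and B fail independently of each other.
    return {(a, b): (P_FAIL if a else 1 - P_FAIL) * (P_FAIL if b else 1 - P_FAIL)
            for a, b in product([0, 1], repeat=2)}

def joint_common_cause():
    # B fails exactly when A does (both driven by one upstream influence).
    return {(0, 0): 1 - P_FAIL, (1, 1): P_FAIL, (0, 1): 0.0, (1, 0): 0.0}

def joint_exclusive():
    # A and B never fail together (one failure prevents the other).
    return {(0, 0): 1 - 2 * P_FAIL, (1, 0): P_FAIL, (0, 1): P_FAIL, (1, 1): 0.0}

def marginals(joint):
    """Node-level summary: P(A fails), P(B fails)."""
    pa = sum(p for (a, _), p in joint.items() if a)
    pb = sum(p for (_, b), p in joint.items() if b)
    return pa, pb

def supernode_fails(joint):
    """Supernode-level behaviour: P(A fails or B fails)."""
    return sum(p for (a, b), p in joint.items() if a or b)

for name, build in [("independent", joint_independent),
                    ("common cause", joint_common_cause),
                    ("exclusive", joint_exclusive)]:
    joint = build()
    pa, pb = marginals(joint)
    print(f"{name:13s} P(A)={pa:.2f} P(B)={pb:.2f} "
          f"P(supernode fails)={supernode_fails(joint):.2f}")
```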

I spent quite a lot of time some years ago thinking about an object-oriented approach to risk analysis. During this work I developed the concept of risk maps which are described in some detail on my company website. I might do a review/update here at some point. I think they help, but I have to admit they are not the whole answer.

So risk in a system is about simple things happening in the microdetail of the system. These simple things propagate right across the system, affecting the important outputs. This is what makes complex systems so risky in general. The safety risk paradigm this article begins with works OK because it gets down to the right level of detail in a fairly simple system. You cannot aggregate and hope to understand the risks. It’s important to say that I’m not trying to be esoteric here. All I want to do is itemise the big uncertainties that exist when we embark on a project in such a way that:

- it’s a comprehensive list (of the big uncertainties defined in some way)

- it’s comprehensible

- it’s capable of being built into a risk model which can be quantified.

Let’s just have a look at one example. You want to know the cost risk for a project. The estimated cost of the project is built up on a cost breakdown structure (CBS). You can easily define a comprehensive list of risks by defining each one to be the uncertainty in one of the elements of the CBS. But the first real risk you write down is inflation. That affects all of them. The next one is delay. Well, that’s caused by a host of other risks, not characterised by the cost uncertainties. The next might be bankruptcy. But you’ve got 10 key subcontractors. Can you treat them all the same (like we generally do)? And so on.
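The inflation problem is easy to demonstrate numerically. The Python sketch below assumes a hypothetical ten-element CBS, each element with independent ±10% estimating uncertainty, plus a single inflation rate (mean 0%, standard deviation 8%, both invented) applied to every element at once. A register that is comprehensive by construction, with one line per CBS element, has nowhere to put that shared factor, and leaving it out makes the total look far more certain than it is.

```python
# A minimal sketch, assuming a hypothetical 10-element cost breakdown
# structure, each element with independent +/-10% estimating uncertainty,
# plus one inflation rate that scales every element in the same scenario.
import random
import statistics

BASE_COSTS = [100.0] * 10  # hypothetical CBS element estimates (£k)

def total_cost(rng, with_inflation):
    # Inflation is drawn once per scenario and shared by all elements.
    inflation = rng.gauss(0.0, 0.08) if with_inflation else 0.0
    return sum(c * rng.uniform(0.9, 1.1) * (1 + inflation) for c in BASE_COSTS)

def spread(with_inflation, n=20_000, seed=7):
    """Return (P90 - P10, mean) of the simulated project total."""
    rng = random.Random(seed)
    totals = sorted(total_cost(rng, with_inflation) for _ in range(n))
    p10, p90 = totals[int(0.1 * n)], totals[int(0.9 * n)]
    return p90 - p10, statistics.mean(totals)

for flag in (False, True):
    width, mean = spread(flag)
    print(f"inflation {'on ' if flag else 'off'}: mean={mean:.0f}, P90-P10={width:.0f}")
```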

All of this shows risk workshops in a good light. They may be crude. (I’ll be recording a recipe for running risk workshops here shortly.) But what they are really good at is picking out individual strands from the neurotic mess that is the risk model. So let’s stop pretending risk workshops are a way to write down all the risks. They do something different but just as valuable: they start to give us an insight into the sort of things that matter for that business or organisation or project. Since they are not comprehensive they are not necessarily much good for creating a risk model, but they do help us craft the model alongside other more comprehensive, but less insightful, techniques. Anything that gets us away from the idea of quantifying each line of a risk register with its Central Limit Theorem pitfalls can’t be bad.
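The Central Limit Theorem pitfall is worth spelling out. The Python sketch below assumes a hypothetical £1m of risk exposure split evenly across n register lines, each quantified independently with the same skewed triangular distribution (50% to 200% of its estimate, most likely 100%). Nothing about the underlying uncertainty changes with n, yet the ratio of P90 to P50 for the total shrinks towards 1 as the register gets longer, simply because independent errors cancel when summed.

```python
# A minimal sketch, assuming a hypothetical £1m of exposure split evenly
# across n register lines, each quantified independently as a triangular
# distribution from 50% to 200% of its estimate (mode 100%).
import random

def p90_over_p50(n_lines, n_sims=10_000, seed=3):
    """Ratio of P90 to P50 for the summed register total."""
    rng = random.Random(seed)
    per_line = 1_000_000 / n_lines
    totals = sorted(
        # random.triangular(low, high, mode); lines drawn independently
        sum(per_line * rng.triangular(0.5, 2.0, 1.0) for _ in range(n_lines))
        for _ in range(n_sims)
    )
    return totals[int(0.9 * n_sims)] / totals[int(0.5 * n_sims)]

for n in (1, 10, 100):
    print(n, round(p90_over_p50(n), 3))
```

In a real register the lines are rarely independent (inflation, delay and shared subcontractors tie them together), which is why this narrowing is an artefact of the method rather than a finding about the project.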